It is 42 years old, and still not actionable. The first implementation was in 1968, and we have talked about integrating it into the supply chain for lo these many years. What is it? …point of sale (POS) data systems at retail, and the connection of POS data to the supply chain to drive actionable replenishment. Yes, consumer products companies still don’t know how to use POS data from the store to power a demand-driven value network. You may be scratching your head and asking why. Let me try to explain.

Interest is High

The topic is hot. Last week, I was asked to share some insights on the use of retail data with the Grocery Manufacturing Information Systems Group (GMA IS Committee). One of my favorite groups, the GMA IS Committee is an industry share group sponsored by GMA. This week, I also spoke on a panel at MIT on Integrated Data Signals and, later this week, I will join my friend Kara Romanow of Consumer Goods Technology (CGT) to help facilitate the CGT downstream data share group. Net/net: the industry is coalescing. While I am sworn to secrecy and cannot share what happened at the MIT event, I wanted to share my insights from the GMA IS Committee discussion.

Why all the interest? Why now? The need is greater. Demand volatility is high, commodity inflation fears are growing, and the channel is becoming more complex. A coalition is building. The data is more available; using it helps to improve revenues (as we all know, eking out growth in this economy is tough); and companies that are using it (even in the form of pilots) are getting returns in weeks. So, why is everyone not using it, and why are supply chains not being redesigned to reap the benefits? It does not fit easily into conventional processes or technologies, and it needs a new roadmap.

No Clear Path

Consumer products leaders (Coca-Cola, Del Monte, General Mills, Hershey, Procter & Gamble, PepsiCo, and Unilever) have used retail data in different ways. Each is successful in its own right, but today, after five years of experimentation, the efforts are still largely pilots. No company in consumer products has aggressively redesigned the value network to use downstream data across multiple functions in a synchronized process. It takes a leap of faith and a path to move forward. After five years of pilot activity, companies, by and large, do not have a roadmap to go forward. In talking to the leaders, you will find seven primary use cases:

- Demand Sensing: Replacement of rules-based forecast consumption in conventional APS (the rules-based mapping of weekly forecasts to daily data in Distribution Requirements Planning (DRP)) with statistical modeling to improve replenishment.

- Transportation Forecasting: The use of downstream data to improve logistics forecasting. In conventional APS systems, there is no good way to get an origin/destination transportation signal.

- Pull-based Replenishment: Development of an automated pull-based replenishment or Vendor Managed Inventory (VMI) signal. This is especially useful in heavily promoted, short-life-cycle products to synchronize product flows with the ebb and flow of promotional store demand.

- Channel Compliance: Sensing of channel compliance for sales alerting: in-stocks, trade promotion adherence, voids, etc.

- New Product Launch: Quicker reads of new product launch acceptance in the channel.

- Forecast Accuracy: The improvement of tactical planning to improve the Mean Absolute Percentage Error (MAPE) for corporate forecasting.

- Sales Reporting and Category Management: Use of the data in the sales account teams to improve retail interaction.

While many other use cases are plausible and talked about, we seldom see the use of downstream data in demand shaping (trade promotion optimization or pricing), social media programs at the store, or in the evaluation of marketing programs.
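For readers less familiar with the Forecast Accuracy use case in the list above, here is a minimal sketch of how MAPE is typically computed. The function name, the sample numbers, and the choice to skip periods with zero actual demand are assumptions made for illustration; they are not taken from any of the companies mentioned.

```python
def mape(actuals, forecasts):
    """Mean Absolute Percentage Error, expressed in percent.

    Periods with zero actual demand are skipped because the percentage
    error is undefined there (an assumption of this sketch).
    """
    errors = [
        abs(a - f) / abs(a)
        for a, f in zip(actuals, forecasts)
        if a != 0
    ]
    if not errors:
        raise ValueError("no periods with non-zero actuals")
    return 100.0 * sum(errors) / len(errors)


# Made-up weekly actuals and forecasts for one item.
actual = [100, 120, 80, 100]
forecast = [110, 100, 90, 100]
print(round(mape(actual, forecast), 2))  # prints 9.79
```

A lower number is better. Note that MAPE calculated at the corporate level can hide large errors at the store or ship-to level, which is one reason the granularity of the data model matters.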

How do we Seize the Opportunity?

Is the roadmap for the use of downstream data (retail point of sale, warehouse inventory data, retailer shipment data, loyalty data) an incremental roadmap with phased projects, or is it a step change requiring a redesign? I argue that it is a step change requiring a redesign. While there are a number of pilots in the industry, companies get stuck in the pilot phase because there is no logical place to put the data in conventional supply chain deployments. Consider these facts:

What do we do with it? In short, retailer data does not fit well within conventional enterprise supply chain architectures of Enterprise Resource Planning (ERP) and Advanced Planning Systems (APS). It is not an easy answer. Using downstream data in conventional enterprise architectures is largely like fitting a square peg in a round hole. When APS systems were installed, the focus was on forecasting what manufacturers should make and when, and what requirements needed to be met at the distribution centers. Since forecasting starts with the goal in mind, the output of these systems is largely a forecast of “what needs to be assembled, shipped, and manufactured” using a “ship from” data model. Since downstream data is based on channel locations, the integration of downstream data requires a “ship to” model. (For more on this topic, reference the article Crossing the Great Divide.) Yes, we can plug it into conventional forecasting systems as an indicator, or map the data from “ship to” to “ship from” and run a parallel optimization model, but this mapping defeats the purpose of improving channel sensing. Net/net: the first step is to change the forecasting data model to use the data directly in a “ship to” forecasting data model.

Functional gaps? Most of the work to date has been in the account teams to improve sales reporting or category management. The sales teams know the data, have their own systems, and often lack the trust to share the insights with the larger organization. Companies struggle with how to forecast globally and execute forecasts locally using downstream data. Tensions run high.

Worth the risk? Most companies want to use it, but they want a defined and certain Return on Investment (ROI). I find this ironic. Companies say that they want to be innovators, but in the next breath, they ask for a proven case study of a definitive ROI. While those that have piloted technologies know that the returns come in weeks, moving from a pilot to mainstream requires money, courage and a leap of faith by the greater organization. It is a bigger project than anyone has attempted to date, and it needs to be funded as pure innovation. The evolution of predictive analytics to drive the next evolution requires co-development with specialized technology providers.

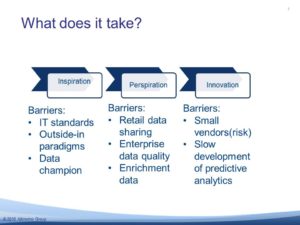

To seize the opportunity, as outlined in figure 1, companies need inspiration, perspiration and innovation. Inspiration: a vision from a line of business leader on how the data can improve the value network. Perspiration: a recognition that it takes hard work to scrub the data and build the predictive analytics to use the data. And, finally, innovation: the courage to fund the project as a research and development innovation initiative, knowing that part of the work will be throw-away. The biggest barriers are us. What do I mean? To effectively use the data, traditional definitions of supply chain planning and conventional notions of enterprise architectures for the integrated supply chain must be discarded.

What would a Roadmap look like?

Since it has not been done before, this is all conjecture; but based on studying the many pilots, I think that the roadmap has nine phases. <What do you think? Did I miss any?> Here are the ones that I think make sense:

Get close to your customer. Understand what is possible. Build a customer data team within a front-office group and begin to understand the data, and use it. Establish incentives for retailers to share data (strive for daily data daily) and give them feedback on data integrity, cleanliness and usefulness. Retail benchmarks are hard to get, and retailers will take the initiatives more seriously if they are tied to pricing or joint incentives.

Train the sales team on how to have the downstream data discussion. Work with teams to get data and incent retailers to share daily data daily.

Get good at using the data in the sales relationship. Learn to talk the retailer’s language and understand their pain points. Use cross-functional supply chain teams as over-lay groups to focus on reducing demand latency, the bullwhip effect, and obsolete inventory. Measure it, and sell the concepts cross-functionally. Investigate buying a DSR and installing the sales-team-specific reporting tools (e.g. Lumidata for Target, or Wal-Mart specific databases) as appliances on the database. For companies with smaller requirements (a rule of thumb is less than 10 Terabytes), consider Relational Solutions, Shiloh, Visionchain or VMT on Teradata or Oracle databases. No matter which tool is selected, avoid aggregating the data and focus on data cleansing, demand synchronization and enrichment. Business intelligence tools like IRI Liquid Data, Nielsen Answers or DME, or SAP BW/Business Objects have not proven equal to the task. Likewise, be cautious in purchasing the Oracle Demand Signal Repository. It is new to the market with uneven results in client references.
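To make “measure it” concrete for the bullwhip effect, one widely used yardstick is the ratio of the variance of orders placed upstream to the variance of consumer demand at the register. The sketch below assumes two aligned weekly series for the same item in the same units; the function name and the numbers are illustrative only.

```python
from statistics import pvariance


def bullwhip_ratio(pos_units, order_units):
    """Variance of upstream orders divided by variance of POS demand.

    A ratio above 1 means order variability is amplified relative to
    what consumers actually bought.
    """
    demand_variance = pvariance(pos_units)
    if demand_variance == 0:
        raise ValueError("POS demand has zero variance; ratio is undefined")
    return pvariance(order_units) / demand_variance


# Made-up weekly units for one item at one retailer.
pos = [100, 102, 98, 100]      # what consumers bought
orders = [100, 110, 90, 100]   # what the retailer ordered
print(bullwhip_ratio(pos, orders))  # prints 25.0
```

Tracking this ratio by item and customer over time gives the cross-functional team a simple number to show whether the signal is getting cleaner.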

Build an innovation team. Create a cross-functional group led by a line of business leader to champion the use of the data and inspire new ideas. Build a binding coalition horizontally across the organization to reduce waste and improve the customer response by reducing demand latency and taming the bullwhip effect. Design push-pull decoupling points and do co-development with predictive analytic vendors (Enterra Solutions, Mu Sigma, Opera Solutions, Revolution Analytics, SAS, and Terra Technology) to build new sensing capabilities. (Note to the reader: this is a cadre of vendors. The only thing that they have in common is an understanding of predictive analytics. Only Terra Technology today has experience with deep optimization on downstream data.)

Design push/pull decoupling points. As the downstream data is used in pull-based replenishment pilots, the supply chain needs to be designed and incentives aligned for PULL versus PUSH. <This is not trivial and will challenge conventional wisdom on supply chain excellence.> Design the flows based on both demand variability and velocity, recognizing that there are multiple supply chains based on these rhythms and cycles. My favorite tools to do this type of analysis are Logictools (now IBM), Optiant (now Logility) and SmartOps. Increase the frequency of analysis to determine the right push-pull decoupling points as the systems mature.
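As a rough illustration of segmenting flows by demand variability and velocity, the sketch below classifies items using mean weekly volume (velocity) and the coefficient of variation (variability). The thresholds, item names and data are assumptions for illustration, and this is far simpler than what the network design tools named above do. Where to place the decoupling point for each segment remains a design decision.

```python
from statistics import mean, pstdev

# Illustrative thresholds; these are assumptions, not industry standards.
CV_THRESHOLD = 0.5         # coefficient of variation above this is "volatile"
VELOCITY_THRESHOLD = 100   # mean weekly units at or above this is "fast"


def segment(weekly_units):
    """Classify one item's demand by velocity and variability."""
    velocity = mean(weekly_units)
    # Coefficient of variation: standard deviation relative to the mean.
    cv = pstdev(weekly_units) / velocity if velocity > 0 else float("inf")
    speed = "fast" if velocity >= VELOCITY_THRESHOLD else "slow"
    shape = "stable" if cv <= CV_THRESHOLD else "volatile"
    return f"{speed}/{shape}"


# Made-up weekly POS units for four hypothetical items.
items = {
    "everyday_staple": [200, 210, 190, 200],
    "promoted_snack": [300, 20, 250, 30],
    "niche_item": [10, 11, 9, 10],
    "seasonal_item": [0, 40, 0, 0],
}
for name, history in items.items():
    print(name, segment(history))
```

Rerunning the classification as new data arrives is one simple way to increase the frequency of analysis as the systems mature.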

Build demand execution processes. These demand sensing processes enable the use of the downstream data in the operational horizon (0-12 weeks) and synchronize this rich, very granular data with longer term tactical forecasting processes. These demand sensing processes will help to flag gaps in S&OP execution, improve the replenishment signal, and provide early alerting to the organization of retail consumption trends. Synchronize (not tightly integrate) Vendor Managed Inventory (VMI) and demand-driven replenishment using downstream data with corporate forecasting. Use multiple data streams and deep statistics to determine the best predictors (store level POS, retail withdrawal, shipments, or orders) to forecast demand. As these systems mature, use Distribution Requirements Planning (DRP) only to push to the distribution mixing center, and use demand execution processes to pull inventory to the store.
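A first pass at finding the best predictor among several data streams can be as simple as a lagged correlation. The sketch below ranks each candidate stream by how strongly its value in one week correlates with the target in the following week. This is a deliberately simple stand-in for the deep statistics described above; the stream names, the one-week lag and the data are assumptions for illustration.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation of two equal-length series (0.0 if either is flat)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / sqrt(var_x * var_y)


def rank_predictors(streams, target, lag=1):
    """Rank streams by |correlation| of stream[t] with target[t + lag]."""
    if lag < 1:
        raise ValueError("lag must be at least 1 period")
    scores = {
        name: pearson(series[:-lag], target[lag:])
        for name, series in streams.items()
    }
    return sorted(scores.items(), key=lambda item: abs(item[1]), reverse=True)


# Made-up weekly series; the target is the orders the manufacturer receives.
orders = [100, 120, 140, 110, 130, 150]
streams = {
    "store_pos": [60, 70, 55, 65, 75, 50],
    "shipments": [100, 90, 120, 100, 95, 110],
}
for name, score in rank_predictors(streams, orders):
    print(f"{name}: {score:+.2f}")
```

In practice the best predictor tends to differ by item, customer and horizon, so a ranking like this would be run at a granular level rather than once for the whole business.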

Use heat maps in a control room environment to track voids, out-of-stocks and compliance issues by geo-code. Focus on eliminating root causes and improving demand execution processes.

Get good at tactical forecasting. To use downstream data, invest in deep forecasting analytics that have the pattern recognition and scalability to recognize the trends in downstream data. Build a channel model (a ship to data model) and focus on getting good at predicting the baseline forecast and demand lift factors. My favorite tools to accomplish this are SAS Demand-driven Forecasting and Oracle’s forecasting tool purchased from Demantra.
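To illustrate what a baseline and a lift factor mean, here is a minimal sketch that takes the median of non-promoted weeks as the baseline and expresses average promoted-week volume as a multiple of it. Commercial forecasting tools model this far more carefully (seasonality, cannibalization, pull-forward); the function name, the use of the median and the data are assumptions for illustration.

```python
from statistics import mean, median


def baseline_and_lift(weekly_units, promo_flags):
    """Return (baseline, lift) for one item at one ship-to location.

    baseline: median units in weeks without a promotion.
    lift: mean promoted-week units divided by the baseline,
          or None if there were no promoted weeks.
    """
    regular = [u for u, promo in zip(weekly_units, promo_flags) if not promo]
    promoted = [u for u, promo in zip(weekly_units, promo_flags) if promo]
    if not regular:
        raise ValueError("need at least one non-promoted week")
    baseline = median(regular)
    if baseline == 0:
        raise ValueError("baseline is zero; lift is undefined")
    lift = mean(promoted) / baseline if promoted else None
    return baseline, lift


# Made-up weekly POS units; True marks a promoted week.
units = [100, 105, 95, 180, 100, 220]
promo = [False, False, False, True, False, True]
baseline, lift = baseline_and_lift(units, promo)
print(baseline, lift)  # baseline of 100 units, lift factor of 2.0
```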

Synchronize trade forms. Work with retailers to develop a B2B system of record for trade promotions (trade forms), either using their systems or Tradepoint purchased by Demandtec. Closely couple this data to corporate forecasting and demand execution processes.

Build robust demand translation processes. Analyze the network weekly to determine the right mapping between channel forecasting (ship to) and the physical distribution network (ship from) requirements. Staff a small team to do this manually using network modeling tools to translate channel demand to manufacturing requirements. Use this team to help translate demand requirements to factory scheduling to improve the translation of the true demand signal to the manufacturing plants and co-packers.
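The translation step described here can be pictured as applying a sourcing map to the channel forecast. The sketch below converts a ship-to forecast (by customer location and item) into ship-from requirements (by distribution center and item) using a table of sourcing shares. The location names, the shares and the data structures are hypothetical; a real network model would also handle lead times, capacities and costs.

```python
from collections import defaultdict


def translate_demand(ship_to_forecast, sourcing):
    """Map channel demand onto the physical distribution network.

    ship_to_forecast: {(customer_location, item): units}
    sourcing: {customer_location: {ship_from_dc: share}}, shares sum to 1
    Returns {(ship_from_dc, item): units}.
    """
    ship_from = defaultdict(float)
    for (location, item), units in ship_to_forecast.items():
        shares = sourcing[location]
        if abs(sum(shares.values()) - 1.0) > 1e-9:
            raise ValueError(f"sourcing shares for {location} must sum to 1")
        for dc, share in shares.items():
            ship_from[(dc, item)] += units * share
    return dict(ship_from)


# Hypothetical weekly channel forecast and sourcing map.
forecast = {
    ("retailer_dc_east", "sku1"): 100,
    ("retailer_dc_west", "sku1"): 60,
    ("retailer_dc_east", "sku2"): 40,
}
sourcing = {
    "retailer_dc_east": {"mfg_dc_a": 1.0},
    "retailer_dc_west": {"mfg_dc_a": 0.5, "mfg_dc_b": 0.5},
}
for key, units in sorted(translate_demand(forecast, sourcing).items()):
    print(key, units)
```

Keeping the sourcing map as a separate, regularly reviewed table is what lets the forecast itself stay in the ship-to model while the weekly analysis adjusts only the mapping.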

Design a demand visibility signal. Since forecasts have to be specific with the end in mind, use downstream data to forecast the channel, transportation requirements (origin/destination), and co-packer requirements. Focus on how to forecast to give usable data (the right context of attributes and data model) to the downstream customers.

What is your Roadmap?

Would love your thoughts. What is your recommended roadmap for the use of retail data? I look forward to your feedback.

For additional reading on the downstream data topic, consider reading these articles on the blog:

http://www.supplychainshaman.com/demanddriven/start-a-new-conversation-free-the-data-to-answer-the-questions-that-you-dont-know-to-ask/

http://www.supplychainshaman.com/new-technologies/is-this-the-future-of-downstream-data/

http://www.supplychainshaman.com/supply-chain-excellence/crossing-the-great-divide/